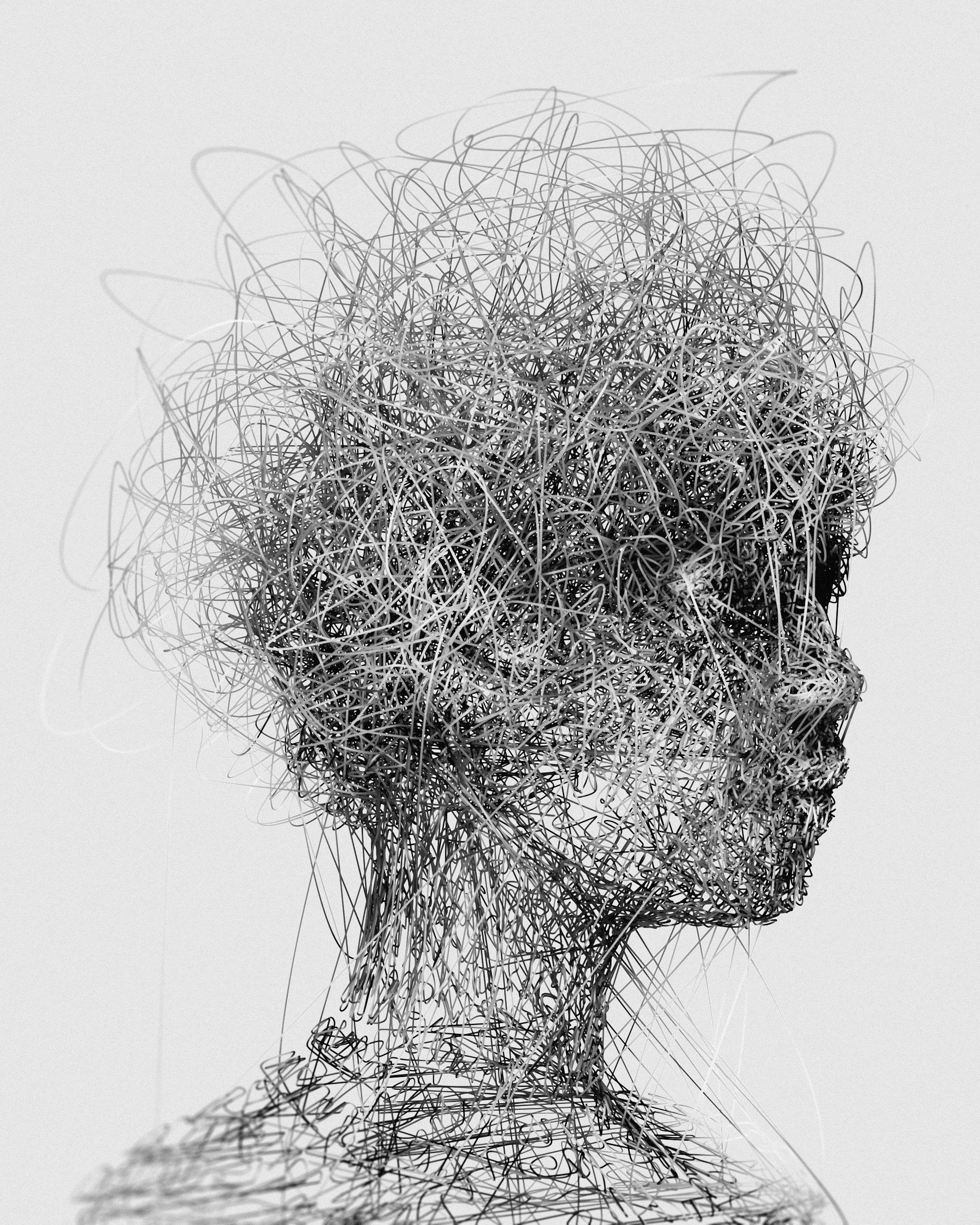

Do LLMs Have a Self-Experience?

Thinking About LLMs as Another Mind

We think a lot about how to apply LLMs effectively to business use cases. Compared to traditional software development and integration, it feels more like thinking about another mind than about a static, deterministic system. LLMs have a “virtual mind” of their own, and I find myself trying to put myself in their shoes, imagining how I would operate effectively under their special constraints and abilities.

It's a useful mental model, but what is the reality of an LLM's self-experience? And if LLMs don't have one already, could any kind of artificial intelligence running on a computer have a self-experience at all?

Brains, Computers, and Possible Sources of Experience

Computers use electricity and simple physical components to perform calculations, store data, take input, and run in a loop. This is very different from the wet biological processes of our human brains. One view is that self-experience arises from complex information processing; another is that self-experience arises from specific physical processes in the brain.

Our upcoming course, From Rocks to Artificial Minds, will go deep on this.[1]

A Famous Argument

A famous argument, John Searle's Chinese Room thought experiment, challenges the idea that genuine understanding arises directly from information processing that exhibits human-like behavior. It goes as follows:

First, consider that symbolically, everything a computer does can also be done by a person with a pen, lots of paper, and a calculator. Physically, a computer mostly moves electrons around or encodes information into a physical medium, and we assign symbolic meaning to those actions (1s and 0s, up to words, images, etc.).

Next, realize that any LLM, like any other computer program, is simply a set of instructions for a computer to execute.
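As a rough illustration of these two points, here is a minimal Python sketch. The instruction names (`add`, `mul`) and the `run` helper are hypothetical, invented only for this example. It treats a "program" as a plain list of instructions and records every intermediate value, much as a person working through the same sheet by hand would write down each step.

```python
# Hypothetical illustration: a "program" as a plain list of instructions
# that a person could follow by hand, one line at a time.

def run(program, value):
    """Apply each instruction in order, recording the work like a paper trail."""
    trace = [value]
    for op, arg in program:
        if op == "add":
            value = value + arg
        elif op == "mul":
            value = value * arg
        else:
            raise ValueError(f"unknown instruction: {op}")
        trace.append(value)
    return value, trace

# The instruction sheet. Nothing in it depends on who (or what) executes it.
PROGRAM = [("mul", 3), ("add", 4), ("mul", 2)]

result, trace = run(PROGRAM, 5)
print(result)  # 38
print(trace)   # [5, 15, 19, 38]
```

A real LLM involves vastly more arithmetic, but each step is the same in kind: look up the next instruction, do the calculation, and write down the result.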

With that in mind, imagine a room where you, knowing no Chinese, have the program and the tools mentioned above, and a Chinese speaker is sending messages into a ChatGPT-like interface. Once a message comes in, you follow the instructions, writing down your progress as you go, until you have formed the same response an LLM would give. You then send it back as a reply.

LLMs have arguably already passed the Turing Test, and you can apply the same logic to a high-fidelity simulation of a human brain. While you would be producing Chinese output that makes it look as though you understand Chinese, you would not actually understand anything other than the math you are doing.

Extending the Argument to Self-Experience

You can extend this argument from understanding to self-experience in a similar system. If symbolic information processing were the source of self-experience, then the system made up of you, the paper, and the instructions would need to have some emergent self-experience that is separate from your own.

A Few Common Objections

A few common objections are:

- The information must be processed very quickly for self-experience to arise. This is analogous to how we don't experience relativistic or quantum effects in our day-to-day life because we are at the wrong scale. When you speed up the information processing, emergent properties would arise that wouldn't exist otherwise. But we can rework the experiment: if the person providing the input is on a spaceship at relativistic speeds, billions of years could pass in the system (allowing time for the calculations), while only milliseconds pass for the person on the spaceship. From their reference frame, the information processing is happening dramatically fast.

- Self-experience is not well understood, so it's silly to use intuition to reason about it. The system may very well have a self-experience. But this seems highly improbable when you consider that we are the ones assigning all of the meaning to the situation. Chinese characters are not inherently meaningful in themselves, and the calculations we're performing could equally be a simulation of countless other systems that follow similarly complex rules. The person in the room could just as well be simulating the movements of strange ants, or gas molecules in a universe with very different laws of physics. For a given program, there are infinitely many things it could be considered to be doing; we are the only ones assigning any particular meaning to it. So why would it give rise to an emergent property tied to just one interpretation?
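The point about interpretation can be made concrete with a toy sketch. Everything in it is hypothetical and chosen only for illustration: the rule in `step` is arbitrary, and the three "readings" (a binary number, a row of lamps, ant positions) are supplied by us, not by the computation.

```python
# Hypothetical illustration: one sequence of symbol manipulations,
# several equally valid readings of what it "means".

def step(state):
    """Purely formal rule: flip the first bit, then rotate left by one."""
    flipped = (1 - state[0],) + state[1:]
    return flipped[1:] + flipped[:1]

state = (0, 1, 1, 0)
for _ in range(3):
    state = step(state)

# Three observer-supplied interpretations of the same final bits.
as_number = int("".join(map(str, state)), 2)      # a binary number
as_lamps = ["on" if b else "off" for b in state]  # a row of lamps
as_ants = [i for i, b in enumerate(state) if b]   # cells occupied by ants

print(state)      # (0, 1, 0, 0)
print(as_number)  # 4
print(as_lamps)   # ['off', 'on', 'off', 'off']
print(as_ants)    # [1]
```

The rule in `step` never refers to numbers, lamps, or ants. Each reading is layered on afterwards by whoever inspects the result.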

Note: You cannot use the above argument against the brain if you are looking at the brain as a physical system instead of something that symbolically manipulates data.

Where This Leaves Us

At Build21, we're convinced that computers cannot have a self-experience—so don't worry too much the next time you curse at an AI chatbot.

[1] From Rocks to Artificial Minds: All the Context You Need to be a Software Engineer or Vibe Coder